By Sherwood, Keith and Craig Idso

In his 26 April 2007 testimony before the Select Committee on Energy Independence and Global Warming of the U.S. House of Representatives, entitled “Dangerous Human-Made Interference with Climate,” NASA’s James Hansen stated that life in alpine regions is “in danger of being pushed off the planet” in response to continued greenhouse-gas-induced global warming. Why? Because that’s what all the species distribution models of the day predicted. Now, however, a set of new-and-improved models is raising some serious questions about Hansen’s overly zealous contention, as described in a “perspective” published in Science by Willis and Bhagwat (2009).

The two researchers - Kathy Willis from the UK’s Long-Term Ecology Laboratory of Oxford University’s Centre for the Environment, and Shonil Bhagwat from Norway’s University of Bergen - raise a warning flag about the older models, stating “their coarse spatial scales fail to capture topography or ‘microclimatic buffering’ and they often do not consider the full acclimation capacity of plants and animals,” citing the analysis of Botkin et al. (2007) in this regard.

As an example of the first of these older-model deficiencies, Willis and Bhagwat report that for alpine plant species growing in the Swiss Alps, “a coarse European-scale model (with 16 km by 16 km grid cells) predicted a loss of all suitable habitats during the 21st century, whereas a model run using local-scale data (25 m by 25 m grid cells) predicted persistence of suitable habitats for up to 100% of plant species [our italics],” as was shown to be the case by Randin et al. (2009), and as we describe in a bit more detail in our Editorial of 23 Sep 2009. In addition, the two Europeans note that Luoto and Heikkinen (2008) “reached a similar conclusion in their study of the predictive accuracy of bioclimatic envelope models on the future distribution of 100 European butterfly species,” finding that “a model that included climate and topographical heterogeneity (such as elevational range) predicted only half of the species losses in mountainous areas for the period from 2051 to 2080 in comparison to a climate-only model.”
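To give a feel for the difference in resolution between the two grids quoted above, the short sketch below counts how many 25 m by 25 m cells fit inside a single 16 km by 16 km cell. It is only an illustrative calculation from the two cell sizes in the quotation; the function name is hypothetical and nothing here reproduces the models of Randin et al. (2009).

```python
def fine_cells_per_coarse_cell(coarse_side_m: float, fine_side_m: float) -> float:
    """Number of fine square cells needed to tile one coarse square cell."""
    # The ratio of the side lengths, squared, gives the ratio of the areas.
    return (coarse_side_m / fine_side_m) ** 2


# Cell sizes quoted by Willis and Bhagwat: 16 km (coarse) and 25 m (local).
cells = fine_cells_per_coarse_cell(16_000, 25)
print(f"{cells:,.0f} local-scale cells per European-scale cell")
```

Every coarse cell therefore averages over several hundred thousand local cells, which is the scale at which topography and microclimate can vary.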

In the case of the older models’ failure to consider the capacity of plants and animals to acclimate to warmer temperatures, Willis and Bhagwat note that “many studies have indicated that increased atmospheric CO2 affects photosynthesis rates and enhances net primary productivity - more so in tropical than in temperate regions - yet previous climate-vegetation simulations did not take this into account.” As an example of the significance of this neglected phenomenon, they cite the study of Lapola et al. (2009), who developed a new vegetation model for tropical South America, the results of which indicate that “when the CO2 fertilization effects are considered, they overwhelm the impacts arising from temperature,” so that “rather than the large-scale die-back predicted previously, tropical rainforest biomes remain the same or [are] substituted by wetter and more productive biomes.” This phenomenon is described in considerably more detail in our recent book (Idso and Idso, 2009), where we additionally review the findings of many studies that demonstrate the tremendous capacity for both plants and animals to actually evolve on a timescale commensurate with predicted climate change in such a way as to successfully adjust to projected warmer conditions.

“Another complexity,” however, as Willis and Bhagwat describe it, is the fact that “over 75% of the earth’s terrestrial biomes now show evidence of alteration as a result of human residence and land use,” which has resulted in “a highly fragmented landscape” that has been hypothesized to make it especially difficult for the preservation of species. Nevertheless, they report that Prugh et al. (2008) have “compiled and analyzed raw data from previous research on the occurrence of 785 animal species in >12,000 discrete habitat fragments on six continents,” and that “in many cases, fragment size and isolation were poor predictors of occupancy.” And they add that “this ability of species to persist in what would appear to be a highly undesirable and fragmented landscape has also been recently demonstrated in West Africa,” where “in a census on the presence of 972 forest butterflies over the past 16 years, Larsen [2008] found that despite an 87% reduction in forest cover, 97% of all species ever recorded in the area are still present.”

Clearly, the panic-evoking extinction-predicting paradigms of the past are rapidly giving way to the realization they bear little resemblance to reality. Earth’s plant and animal species are not slip-sliding away - even slowly - into the netherworld of extinction that is preached from the pulpit of climate alarmism as being caused by CO2-induced global warming.


References

Botkin, D.B., Saxe, H., Araujo, M.B., Betts, R., Bradshaw, R.H.W., Cedhagen, T., Chesson, P., Dawson, T.P., Etterson, J.R., Faith, D.P., Ferrier, S., Guisan, A., Hansen, A.S., Hilbert, D.W., Loehle, C., Margules, C., New, M., Sobel, M.J. and Stockwell, D.R.B. 2007. Forecasting the effects of global warming on biodiversity. BioScience 57: 227-236.

Idso, C.D. and Idso, S.B. 2009. CO2, Global Warming and Species Extinctions: Prospects for the Future. Science and Public Policy Institute, Vales Lake Publishing, LLC, Pueblo West, Colorado, USA.

Lapola, D.M., Oyama, M.D. and Nobre, C.A. 2009. Exploring the range of climate biome projections for tropical South America: The role of CO2 fertilization and seasonality. Global Biogeochemical Cycles 23: 10.1029/2008GB003357.

Larsen, T.B. 2008. Forest butterflies in West Africa have resisted extinction … so far (Lepidoptera: Papilionoidea and Hesperioidea). Biodiversity and Conservation 17: 2833-2847.

Luoto, M. and Heikkinen, R.K. 2008. Disregarding topographical heterogeneity biases species turnover assessments based on bioclimatic models. Global Change Biology 14: 483-494.

Prugh, L.R., Hodges, K.E., Sinclair, R.E. and Brashares, J.S. 2008. Effect of habitat area and isolation on fragmented animal populations. Proceedings of the National Academy of Sciences USA 105: 20,770-20,775.

Randin, C.F., Engler, R., Normand, S., Zappa, M., Zimmermann, N.E., Pearman, P.B., Vittoz, P., Thuiller, W. and Guisan, A. 2009. Climate change and plant distribution: local models predict high-elevation persistence. Global Change Biology 15: 1557-1569.

Willis, K.J. and Bhagwat, S.A. 2009. Biodiversity and climate change. Science 326: 806-807.

Book reviewed by Madhav Khandekar

Climate Change in the 21st Century by Stewart J. Cohen with Melissa W. Waddell is one of the latest books on the most intensely debated scientific issue of our time, climate change. The book came out soon after the well-publicized Copenhagen meeting in December 2009, organized by the UNFCCC (United Nations Framework Convention on Climate Change) and the IPCC (Intergovernmental Panel on Climate Change). The Copenhagen meeting ended without any substantive agreement on reducing the greenhouse gases (GHGs) which have been identified by the IPCC and its adherents as responsible for recent warming of the earth’s surface and subsequent climate change. The book provides an alternative to the IPCC’s approach (on climate change) by suggesting an adaptation strategy, something that is now discussed by many scientists and policymakers.

The first two chapters of the book provide a background on the UNFCCC and the IPCC and the ultimate objective to get an international agreement on GHG reduction. The emergence of adaptive governance in recent years at local and regional levels is described as an outgrowth of UNFCCC’s unsuccessful attempts to come to terms with developed vs developing nations on GHG targets. The adaptive governance approach is characterized as a ‘bottom-up’ approach to climate change rather than the ‘top-down’ approach taken by UNFCCC and its ongoing process of negotiating world-wide GHG targets. This approach is further exemplified in chapter three using a case study for Barrow, Alaska (located west of the Beaufort Sea at lat ~71N), a small community of a few thousand permanent residents. The climate change impact in Barrow and the North Slope is identified primarily through an intense storm of October 1963 and several other subsequent storms. This chapter, the longest in the book, discusses how the local and regional government initiatives helped develop adaptation strategies to minimize extreme weather impacts.

The next chapter of the book provides a framework for developing adaptive governance as a decentralized approach to climate change with community-based initiatives. The last chapter (five) provides a rationale for developing this theme and further summarizes alternative approaches like low carbon technology (e.g., solar electric power plants, wind turbines), geo-engineering (e.g., albedo enhancement by stratospheric sulphur injection) and related initiatives developed in recent years. The last few pages of the chapter are devoted to the latest developments on emission targets, the failure at the Poznan (Poland) meeting in December 2008 to secure any agreement, the impact of the global economic melt-down and slow recovery, and changes in the political landscape in the US and subsequent changes in the US Climate Change Action Plans, leading up to the Copenhagen meeting. The book does not discuss the outcome of the Copenhagen meeting nor the failure of negotiations at the meeting due to refusal by developing nations (primarily Brazil, China and India) to go along with any GHG reduction targets being imposed by the western nations.

The book presents a refreshing look at the climate change issue and how to cope with future climate change impacts. This is a significant departure from the IPCC’s mitigation approach, which has been stymied so far, due to lack of political will and many other socioeconomic parameters. The concept of a simple adaptation strategy is now gaining traction and this book provides a useful background on how this can become more effective and more acceptable in the future. It is instructive to note a couple of commentaries on the book:

1. Prof Judith Curry, climate scientist, Georgia Institute of Technology USA: “In the wake of Copenhagen, this book couldn’t be more timely for those genuinely concerned about climate change and disappointed with the outcome of climate policies to date.”

2. Prof Mathew Auer, International Environmental Policy, Indiana University USA: “Brunner and Lynch [book] offer a persuasive alternative to the ‘big science, big politics’ formula for combating global climate change.”

Besides the example of climate change at Barrow Alaska, the book also provides examples of other locations and regions where climate change impacts are being tackled at local levels. In the Pacific Islands, the PEAC (Pacific ENSO Application Center) informed local decision-makers about impending drought from the intense 1997-98 El Nino and initiated suitable action on minimizing the drought impacts.

In Melbourne Australia, amid continuing drought, city officials and other professionals initiated action to make major amendments to local water policies. In Nepal, melted ice water from several glaciers caused significant accumulation of water in a nearby lake. The Government of Nepal, with the support of international donors, initiated a project in 1998 to lower the lake level by drainage, so as to prevent it from bursting and creating catastrophic loss of water and damage to property. These and other examples in the book are primarily geared towards documenting ‘global warming’ impacts as identified by the IPCC.

The reality of recent climate change is, however, far more complex and does not conform to IPCC projections. In the Canadian Atlantic Provinces, the mean temperature has been declining for the past 25 years or more and the last ten years have witnessed heavier winter snow accumulation in many locales there. In the conterminous US, the seaboard States in the southeast as well as some of the midwestern States have witnessed heavier winter snow accumulation in recent years. The past winter saw unusually heavy snow accumulation in Washington, the US capital, which was paralyzed for almost a week in January 2010, with so many roads clogged with snow! There are many other examples in other regions of the earth which show glaring disparity between IPCC projections and the climate reality of the last ten years or more. A discussion on the climate change reality and appropriate adaptive initiatives tailored to specific climate change impacts would have been a useful addition to the book. Notwithstanding the above minor exclusion, the book is a welcome addition to the plethora of books and documents on environment and climate change that are available at present. This book should be on a “must read” list of decision-makers at various levels of government in Canada.

Further, the book could provide a valuable guideline at future national and international meetings on climate change and emission targets. The book’s main message that it is time to take a closer look at adaptation strategy (Plan B) should now become the new mantra for coping with future climate change.

By Stephen Murgatroyd, The Nova Scotian

What a difference a year makes. This time last year the environmental movement was gearing up for a major breakthrough at the Copenhagen Climate Change Summit. With a combination of “doom and gloom” soothsayers - Ban Ki-moon, Al Gore, Prince Charles, James Hansen, David Suzuki - and optimistic negotiators, it was clear that Copenhagen was being positioned as “the last chance” we had to save the planet.

We know what happened during the negotiations, however. Polluters couldn’t agree with the small islands and the developing world and the negotiations fell apart, with a compromise “let’s-look-as-if-we-might-save-the-planet” deal being signed off by a few countries at the end of a tough 10 days of negotiation.

Grief therapy

Since then the environmental movement has been going through a period of loss - grieving the loss of an ideal and hoping for a new reality that will culminate in a new global climate change negotiation in Brazil in December.

But the game is up. There will not be a meaningful commitment to climate change mitigation involving all of the leading polluters, especially the U.S., China, India and Canada. What is more, the general public in Canada, the U.S. and Britain are all signalling that climate change is less of a priority for them now than it was five years ago.

Just as the language has gone through significant change - from “global warming” through “climate change,” from “climate catastrophe” to the “climate challenge” - so now the environmental movement is going through its own metamorphosis. According to The Guardian (U.K.), “the economic case for global action to stop the destruction of the natural world is even more powerful than the argument for tackling climate change, a major report for the United Nations will declare this summer - a fact reinforced by the psychological, social and economic impacts of the BP oil spill in the Gulf of Mexico.”

One reason for this shift is money. Groups such as Conservation International and the Nature Conservancy are among the most trusted environmental “brands” in the world, pledged to protect and defend nature. Yet many of the green organizations meant to be leading the fight are busy securing funds from those who are also destroying the environment through mining and exploration. Sierra Club - the biggest green group in the U.S. - was approached in 2008 by the maker of Clorox bleach, which said that if the club endorsed its new range of “green” household cleaners, they would give it a percentage of the sales. The club’s corporate accountability committee said the deal created a blatant conflict of interest - but took it anyway. Money talks. Right now the money is saying that biodiversity and environmental impacts of pollution, deforestation, land use changes and other matters are more important than climate change.

A second reason is public opinion. The public are disaffected by all the talk about the need for a response to climate change and both the lack of action and the costs of the actions that need to be taken. In the U.K., where energy rationing over the next decade is a real possibility due to the now defeated government’s dithering on environmental policy, many are now balking at the rising costs of energy and the ugliness of the countryside blighted by wind turbines. In the U.S., public support for action on climate change is down from 46 per cent of the population to 36 per cent in just one year. Environmental groups no longer enjoy the wide support of the people when they focus on climate change.

A third reason is political reality. Climate change as a policy strategy in the U.S. and Canada is stuck and likely to be so for some time. The U.S. Senate has the Kerry-Lieberman bill to debate, but it is unlikely to pass. Canada has said it will follow the U.S. lead on a North American strategy, so Canada is also unlikely to do anything until the U.S. passes appropriate legislation.

However, major changes are taking place with respect to conservation, water, land use and air quality on both sides of the U.S.-Canada divide and serious attention to conservation and cleanup can be expected on both sides of the border following the BP spill. Environmental groups are already gearing up to lobby on these issues, dusting off old policies and approaches from the early 1990s. Both the U.S. and Canada are more likely to enact legislation on these issues than on transformative changes required to “stop” climate change.

Climate change problematic

The final reason that the environmental groups are shifting ground is that the science of climate change remains problematic. While some would argue that the core science demonstrating that the climate is changing and that this is due largely to the actions of people remains unchanged, the skeptics have gained sufficient ground over the last year to plant large trees of doubt. Worse, data from real world observations (as opposed to data from climate change models) provide opportunities for varying interpretations of the current state of the planet. The science is becoming a tough sell.

For all these reasons, the environmentalists will now focus more on environmental degradation and cleanup than on climate change - deforestation, water and land use will be the new focus for their work. Not a bad thing either.

By Roger Pielke Sr., Climate Science

On May 18, 2010, I posted on a proposal to NSF that was highly rated each of the three times it was submitted, yet was rejected each time, even after further revisions were made.

The May post is ‘Is The NSF Funding Process Working Correctly?’

The NSF mission reads ‘The National Science Foundation Act of 1950 (Public Law 81-507) set forth NSF’s mission and purpose: To promote the progress of science; to advance the national health, prosperity, and welfare; to secure the national defense…

The Act authorized and directed NSF to initiate and support:

(1) basic scientific research and research fundamental to the engineering process,

(2) programs to strengthen scientific and engineering research potential,

(3) science and engineering education programs at all levels and in all the various fields of science and engineering,

(4) programs that provide a source of information for policy formulation,

(5) and other activities to promote these ends.

Over the years, NSF’s statutory authority has been modified in a number of significant ways. In 1968, authority to support applied research was added to the Organic Act. In 1980, The Science and Engineering Equal Opportunities Act gave NSF standing authority to support activities to improve the participation of women and minorities in science and engineering. Another major change occurred in 1986, when engineering was accorded equal status with science in the Organic Act.

NSF has always dedicated itself to providing the leadership and vision needed to keep the words and ideas embedded in its mission statement fresh and up-to-date. Even in today’s rapidly changing environment, NSF’s core purpose resonates clearly in everything it does: promoting achievement and progress in science and engineering and enhancing the potential for research and education to contribute to the Nation. While NSF’s vision of the future and the mechanisms it uses to carry out its charges have evolved significantly over the last four decades, its ultimate mission remains the same.’

The title of our rejected proposal is “Collaborative Research: Sensitivity of Weather and Climate in the Eastern United States to Historical Land-Cover Changes since European Settlement”, and its Project Summary reads:

“The Earth’s weather and climate is strongly influenced by the properties of the underlying surface. Much of the solar energy that drives the atmosphere first interacts with the land or sea surface. Over land regions this interaction is modulated by surface characteristics such as albedo, aerodynamic roughness length, leaf area index (LAI), etc. As these characteristics change, either from anthropogenic or natural land-cover disturbances, the amount of energy reaching the atmosphere from the land surface, and thus weather and climate, is expected to change. The goal of this project is to determine the sensitivity of weather and climate to historical land-cover changes in the eastern United States since the arrival of European settlers. Regional Atmospheric Modeling System (RAMS) coupled with the Simple Biosphere (SiB) model, SiB-RAMS, will be used to perform a series of one-year ensemble simulations over the eastern United States with the present-day and several past land-cover distributions. The land-cover distributions will be based on the new Reconstructed Historical Land Cover and Biophysical Parameter Dataset developed by Steyaert and Knox (2008). The influence of the land-cover changes on temperature and precipitation will be examined and compared with that expected from CO2-induced climate change (IPCC 2007). The seasonality of the changes in precipitation and temperature due to land-cover change will be explored. Also, the relative importance of each land-cover biophysical parameter to the total simulated change in temperature and precipitation will be assessed.”

We have presented the letter from the Deputy Assistant Director regarding our request for reconsideration.

This letter is quite informative as it is a cursory, pro forma response without any detail. What it confirms is that program managers have considerable latitude in decision-making and can eliminate well-reviewed projects if they differ from their priorities. The program managers decide what is “basic scientific research and research fundamental to the engineering process” rather than relying on the reviewers to determine this [of course, they can also select known biased reviewers if they want to reject a proposal].

Since the ratings of our proposal were high, the reason for the rejection must be that the program managers concluded that the role of land use change in the climate system is not a high research priority. Also, despite the NSF requirement listed in their mission statement “to support activities to improve the participation of women and minorities in science and engineering”, the fact that a woman (Dr. Lixin Lu) was the PI on the project was not discussed in the reconsideration (see letter part 1 here, part 2 here).

My recommendation to improve the process, which I presented in my May post, was to “present ALL proposal abstracts, anonymous reviews of both accepted and rejected proposals and program managers’ decision letters (or e-mails) on-line for public access; present the date of submission and final acceptance (or rejection) of the proposal.”

I also recommend they make easily available the list of all of the reviewers used during the year within each NSF program office. NSF program managers have considerable ability to slant the research that they fund, with insufficient transparency of the review process. This has become quite a problem in the climate science area where, as one example, in recent years they have elected to fund climate predictions decades into the future (e.g. see which was funded in part by the NSF; I will discuss specific examples of such funded projects by the NSF in a future post).


By Anthony Watts, Watts Up With That

When I last wrote about the solar activity situation, things were (as Jack Horkheimer used to say) “looking up”. Now, well, the news is a downer. From the Space Weather Prediction Center (SWPC) all solar indices are down, across the board (below, enlarged here):

The radio activity of the sun has been quieter (below, enlarged here):

And the Ap Geomagnetic Index has taken a drop after peaking last month (below, enlarged here):

WUWT contributor Paul Stanko writes:

As has been its pattern, Solar Cycle 24 has managed to snatch defeat from the jaws of victory. The last few months of raw monthly sunspot numbers from the Solar Influences Data Analysis Center (SIDC) in Belgium are: January = 12.613, February = 18.5, March = 15.452, April = 7.000 and May = 8.484. After spending 3 months above the criteria for deep solar minimum, we’re now back in the thick of it.

The 13 month smoothed numbers, forecast values and implication for the magnitude of the cycle peak are as follows:

June 2009 had a forecast of 5.5, actual of 2.801, implied peak of 45.83

July 2009 had a forecast of 6.7, actual of 3.707, implied peak of 49.79

August 2009 had a forecast of 8.1, actual of 5.010, implied peak of 55.67

September 2009 had a forecast of 9.7, actual of 6.094, implied peak of 56.55

October 2009 had a forecast of 11.5, actual of 6.576, implied peak of 51.46

November 2009 had a forecast of 12.6, actual of 7.190, implied peak of 51.36

December 2009 had a forecast of 14.6, actual would require data from June.
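The “implied peak” figures listed above can be approximately reproduced by scaling a forecast cycle peak by the ratio of the actual to the forecast smoothed sunspot number. The sketch below is a reconstruction under stated assumptions, not Paul Stanko’s own method: the forecast peak of 90 is inferred from the listed numbers rather than stated in the text, and the function name is hypothetical.

```python
# Assumption: the forecast peak (90) is inferred from the quoted figures,
# not given in the text. Change it if the underlying forecast differs.
FORECAST_PEAK = 90.0

# (month, forecast smoothed number, actual smoothed number, quoted implied peak)
ROWS = [
    ("June 2009", 5.5, 2.801, 45.83),
    ("July 2009", 6.7, 3.707, 49.79),
    ("August 2009", 8.1, 5.010, 55.67),
    ("September 2009", 9.7, 6.094, 56.55),
    ("October 2009", 11.5, 6.576, 51.46),
    ("November 2009", 12.6, 7.190, 51.36),
]


def implied_peak(forecast: float, actual: float,
                 forecast_peak: float = FORECAST_PEAK) -> float:
    """Scale the forecast peak by how far the actual value lags the forecast."""
    return actual / forecast * forecast_peak


results = {month: implied_peak(f, a) for month, f, a, _ in ROWS}

for month, forecast, actual, quoted in ROWS:
    print(f"{month}: computed {results[month]:.2f}, quoted {quoted}")
```

Under this assumption the computed values agree with the quoted ones to within about 0.01, which suggests the list was produced by a simple proportional scaling of this kind.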

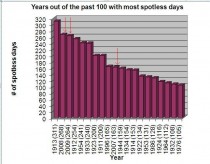

Solar Cycle 24 now has accumulated 810 spotless days. 820, which would require only 10 more spotless days, would mean that Cycle 24 was one standard deviation above the mean excluding the Dalton and Maunder Grand Minima.

One standard deviation is often an accepted criterion for considering an occurrence ‘unusual’.

Here is the latest plot from Paul Stanko (below, enlarged here):

The Scientific Alliance, June 10, 2010

Quite a lot, according to Friends of the Earth campaigner Kirtana Chandrasekaran in an interview on UK Radio 4’s Today programme this week. Her opportunity came when she was asked to comment on an interview with Professor Jonathan Jones of the Sainsbury Laboratory in Norwich, in which he talked about the first field trials of two genetically modified potato varieties. Each variety contains a gene from a wild South American potato species, conferring resistance to the devastating disease late blight, caused by the mould Phytophthora infestans.

Once Professor Jones had explained the need for such trials - late blight is, after all, the disease which caused the Irish potato famine, which still results in worldwide losses worth some £3.5bn each year, with farmers in northern Europe needing to spray up to 15 times a season to control it - Ms Chandrasekaran had her opportunity to put FoE’s case.

The reason GM crops are not grown in the UK, she claimed, was not because of opposition to them (without so much as an apology to all those activists who campaign so hard against them). Instead, they are both hugely expensive and unnecessary, because conventional breeding has achieved better results already, and the last 20 years of plant biotechnology research has failed to deliver. But, for those not convinced by these arguments, she had a further one, that GM crops in general, and these potatoes in particular, carry ‘very high risks’.

These supposed risks are manifested in two ways: the potatoes could ‘contaminate’ other varieties, and they contain an ‘antibiotic gene’ which could pass to humans and increase resistance to a range of antibiotics. When pressed by the interviewer she confirmed that these potatoes could cause harm to humans. Such serious accusations deserve our full attention, so let’s deconstruct some of these scary arguments.

First, on the question of ‘contaminating’ other varieties, there are a number of reasons why potatoes are an almost perfect crop to work on if we want to minimise what is more correctly called horizontal gene flow. Potatoes, originating as they do from South America, have no natural relatives in Europe, so any cross-pollination can only take place with other cultivated potatoes. To pollinate each other, these must flower at the same time, but actually potatoes produce rather few flowers and small amounts of pollen which can only be carried significant distances by insects. In the unlikely event that pollination does occur, no hybrid plants result, because potatoes are propagated vegetatively, via small tubers (‘seed’ potatoes). In actual fact, they are clones, but let’s not get into anything else which might scare people.

So other potatoes will not be ‘contaminated’, which leads us to the second point of what is seen as the primary danger, the presence of an ‘antibiotic gene’. What she obviously meant to say was antibiotic resistance gene, which might have been used as a selectable marker. In fact, it is very unlikely that this particular potato does contain such a gene, since there has been a move away from their use in recent years. This is largely because of the theoretical but minuscule risk of creating even higher levels of resistance among harmful bacteria (the primary causes actually being the general overuse of antibiotics, the failure of many people to complete courses of medication, and the past use of low levels of antibiotics as growth promoters in animal feed).

If GM potatoes are not as risky as Friends of the Earth might like to suggest, how about the argument that they are unnecessary, and that millions of pounds, euros and dollars have been spent creating transgenic plants when conventional breeding has got there first? This argument really defies common sense. Genetic modification of plants is arguably the technology which has been accepted by farmers faster than any other. In 2009, 134 million hectares of GM crops were grown - from effectively zero 15 years ago - by 14 million farmers in 25 countries. Since they pay more for the privilege, we have to conclude that these farmers are either chronically stupid or that the transgenic varieties did in fact provide them with some benefits not available from conventional breeding.

In fact, to the average person, the benefits seem relatively modest, consisting almost entirely of herbicide tolerance and pest resistance in soy, maize, cotton and oilseed rape, which save farmers time, reduce their need to spray, and can give them larger harvests. Many other traits are in the pipeline, but the ones currently on the market obviously fulfil a need.

In the case of blight-resistant potatoes, there is indeed an existing level of natural resistance in some varieties, but these are not ones which are popular with consumers. And, if they were, the resistance would not be robust. P. infestans exists in many different races which continue to evolve; the aim of the Sainsbury Laboratory programme is ultimately to add multiple resistance genes to popular varieties to protect them from blight in the long term.

For the farmer, the benefit would be a saving of several hundred euros per hectare if spraying could be avoided, particularly in cool, wet seasons. And, although the tiny traces of fungicide which remain on potatoes are of no concern at all for human health, the fact remains that many consumers would be happy to buy potatoes which have not been sprayed. Despite the arguments of Friends of the Earth, organic producers of main crop potatoes are often forced to use copper sulphate sprays to control blight. They would not be doing this if saleable varieties which carried natural blight-resistance were already available.

So, do GM potatoes represent a very high risk? No. Are they unnecessary? No. Are they ‘hugely expensive’? Well, the R&D is indeed more costly than conventional breeding, but the final result seems to justify it, otherwise farmers would not buy the seeds and agricultural supply companies would stop development because they would have no market. Perhaps, then, Ms Chandrasekeran’s final argument was revealing. GM crops, she said, are ‘profit friendly but not people friendly’.

Via such a glib but meaningless slogan, Friends of the Earth reveal their anti-business stance. First shooting themselves in the foot by accepting that GM crops are profitable while simultaneously having us believe they are both dangerous and not needed, they seem to be convinced that anything profitable is also in some way tainted. Rather ironic, then, that the GM potato they criticise so vehemently is being developed by a public sector research institute (although probably irredeemably damned by support from the Sainsbury family’s Gatsby Foundation). Is this the level of argument which FoE donors are supporting? Perhaps they should ask for their money back.


By Steve Goddard

Over the last three years, Arctic ice has gained significantly in thickness. The graph above was generated by image processing and analysis of PIPS maps, and shows the thickness histogram for June 1 of each year since 2007.

The blue line represents 2008, and the most abundant ice that year was less than 1.5 metres thick. That thin ice was famously described by NSIDC as “rotten ice.” In 2009 (red) the most common ice had increased to more than 2.0 metres, and by 2010 (orange) the most common ice had increased to in excess of 2.75 metres thick.

We have seen a steady year-over-year thickening of the ice since the 2007 melt season. Thinner ice is more likely to melt during the summer, so a big melt looks much less likely than in either of the previous two summers. More than 70% of the ice this year is thicker than 2.25 metres. By contrast, more than half of the ice was thinner than 2.0 metres in 2008.
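For readers who want to reproduce figures of this kind, the following is a minimal sketch of how the most common thickness and the share of ice above a threshold could be computed once per-cell thickness values have been extracted from a map. It is not the processing actually used for the graph: the function name `thickness_summary`, the 0.25 metre bin width and the sample values are all assumptions made for illustration, and the sample list is placeholder data, not PIPS output.

```python
from collections import Counter


def thickness_summary(thicknesses_m, bin_width_m=0.25, threshold_m=2.25):
    """Return (lower edge of the most common bin, fraction thicker than threshold).

    thicknesses_m: per-cell ice thickness in metres; None or 0 marks a cell
    with no ice (land or open water) and is ignored.
    """
    cells = [t for t in thicknesses_m if t is not None and t > 0]
    if not cells:
        raise ValueError("no ice-covered cells")

    # Histogram: count cells per bin of width bin_width_m.
    bins = Counter(int(t // bin_width_m) for t in cells)
    modal_index = max(bins, key=bins.get)
    modal_lower_edge = modal_index * bin_width_m

    # Share of ice-covered cells thicker than the threshold.
    fraction_thicker = sum(t > threshold_m for t in cells) / len(cells)
    return modal_lower_edge, fraction_thicker


# Placeholder values for illustration only (hypothetical, not real data).
sample = [1.0, 1.4, 2.6, 2.8, 2.9, 3.1, None, 0.0, 2.3, 2.85]
modal_edge, frac = thickness_summary(sample)
print(f"most common bin starts at {modal_edge} m; {frac:.0%} thicker than 2.25 m")
```

The counts here are per map cell, so they describe ice area rather than volume; a statement such as "more than 70% of the ice" is read the same way in this sketch.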

So why did 2008 start out with so little thick ice? Because during the summer of 2007 much of the ice melted or was compressed by the wind. During the winter of 2007-2008, much of the remaining thick ice blew out into the North Atlantic and melted. So by the time that summer 2008 arrived, there was very little ice left besides rotten, thin ice. Which led to Mark Serreze’s famous “ice-free North Pole bet.”

Can we find another year with an ice distribution similar to 2010’s? I can see Russian ice in my Windows. Note in the graph below that 2010 is very similar to 2006.

2006 had the highest minimum (and smallest maximum) in the DMI record. Like 2010, the ice was compressed and thick in 2006.

Conclusion: Should we expect a nice recovery this summer due to the thicker ice? You bet ya. Even if all the ice less than 2.5 metres thick melted this summer, we would still see a record high minimum in the DMI charts. Mark Serreze has a different take for 2010: “Could we break another record this year? I think it’s quite possible,” said Mark Serreze of the National Snow and Ice Data Center in Boulder, Colorado.

Bookmark this post for reference in September.

“The report of my death was an exaggeration” - Mark Twain

-----------

Addendum By Steve Goddard 6/3/10:

Anyone betting on the minimum extent needs to recognize that summer weather can dramatically affect the behaviour of the ice. The fact that the ice is thicker now is no guarantee that it won’t shrink substantially if the summer turns out to be very warm, windy or sunny. Joe Bastardi believes that it will be a warm summer in the Arctic. I’m not a weather forecaster and won’t make any weather predictions.

By Marlo Lewis, CEI

By Amy Harder

NationalJournal.com

Should Congress strip the Environmental Protection Agency of its power to regulate carbon dioxide emissions?

This week, Sen. Lisa Murkowski, R-Alaska, is expected to call for a vote on a disapproval resolution that would strip the EPA of that authority. Other senators are proposing slightly different approaches. Sen. Jay Rockefeller, D-W.Va., would delay the EPA’s carbon dioxide regulations for two years. Meanwhile, Sens. Robert Casey Jr., D-Pa., and Thomas Carper, D-Del., would allow EPA to regulate only the largest emitters of greenhouse gases, such as power plants and oil refineries. They would exempt small businesses and farms.

Should Congress endorse one of these options or applaud EPA for tackling a climate-change problem?

Cuff ‘Em

By Marlo Lewis

Overturning EPA’s endangerment finding is a constitutional imperative. Unless stopped, EPA will be in a position to determine the stringency of fuel economy standards for the auto industry, set climate policy for the nation, and even amend the Clean Air Act - powers never delegated to the agency by Congress.

Worse, America could end up with a pile of greenhouse gas regulations more costly and intrusive than any climate bill or treaty the Senate has declined to pass or ratify, yet without the people’s representatives ever voting on it.

Sen. Lisa Murkowski’s resolution of disapproval (S.J.Res.26) would nip this mischief in the bud by overturning the endangerment finding. The resolution puts a simple question squarely before the Senate: Who shall make climate policy - lawmakers who must answer to the people at the ballot box or politically unaccountable bureaucrats, trial lawyers, and activist judges appointed for life?

Precisely because S.J.Res.26 would restore political accountability to climate policy making, regulatory zealots are waging smear campaigns against it. Climate Progress calls it “polluter crafted” (impossible, because the form and language of the resolution are fixed by the Congressional Review Act). MoveOn.Org warns the resolution condemns many Americans to “smoke the equivalent of a pack a day just from breathing the air” (nonsense - just one cigarette delivers 12-27 times the daily dose of fine particulate matter that non-smokers get in cities with the most polluted air). Environmental Defense Action Fund claims the resolution will give corporate polluters a “bailout” (also impossible, because S.J.Res.26 is not a tax or spending bill).

Sen. Barbara Boxer (D-Calif.) tries to equate S.J.Res.26 with a hypothetical vote to overturn the Surgeon General’s famous report linking cigarette smoking to cancer. We’re supposed to be scandalized that Congress would attempt to repeal science and keep us in the dark about threats to our health and welfare. This too is calumny.

First, the Surgeon General’s report was purely an assessment of the medical literature. It did not even offer policy recommendations. If the endangerment finding were simply one agency’s review of the science, Congress would have no business voting on it either. Unlike the Surgeon General’s report, the endangerment finding is both trigger and precedent for sweeping policy changes Congress never approved.

Second, although some oppose the endangerment finding on scientific grounds, S.J.Res.26 neither takes nor implies a position on climate science. The resolution would overturn the “legal force and effect” of the endangerment finding, not its reasoning or conclusions. The resolution is a referendum not on climate science but on the constitutional propriety of EPA making climate policy without new and specific statutory guidance from Congress.

Former EPA Administrator Russell Train contends that Congress already signed off on whatever greenhouse gas regulations EPA adopts. When? Why, back in 1970 - decades before global warming became a political issue - when Congress enacted the Clean Air Act. Congress authorized EPA to evaluate individual pollutants, and established a scientific criterion as the sole trigger for action: whether a pollutant “may reasonably be anticipated to endanger” public health or welfare. EPA bases its greenhouse gas regulations on its endangerment finding, so the Clean Air Act is working “as Congress intended,” Train concludes.

But all this proves is that EPA has jumped through the requisite procedural hoops, which nobody disputes. That in no way demonstrates that Congress meant to regulate greenhouse gases through the Clean Air Act. Train ignores the obvious. Congress did not design the Clean Air Act to be a framework for climate policy, has never voted for the Act to be used as such a framework, and has never approved the far-reaching regulatory ramifications of EPA’s endangerment finding.

As even EPA admits, applying the Act “literally” (i.e. lawfully, statutorily) to CO2 leads to “absurd results.” For example, EPA and its state counterparts would have to process an estimated 41,000 pre-construction permits annually (instead of 280) and 6.1 million operating permits annually (instead of 14,700). Such workloads vastly exceed agencies’ administrative resources. Ever-growing backlogs would paralyze environmental enforcement, block new construction, and thrust millions of firms into legal limbo.
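To put those permit figures in proportion, here is a short sketch that works out how many times larger each projected workload is than the current one. It uses only the four numbers quoted above; the variable and function names are illustrative.

```python
# Annual permit counts as quoted in the text above.
current_preconstruction = 280
projected_preconstruction = 41_000
current_operating = 14_700
projected_operating = 6_100_000


def workload_multiple(projected, current):
    """How many times larger the projected workload is than the current one."""
    return projected / current


pre_multiple = workload_multiple(projected_preconstruction, current_preconstruction)
op_multiple = workload_multiple(projected_operating, current_operating)

print(f"pre-construction permits: about {pre_multiple:.0f} times the current volume")
print(f"operating permits: about {op_multiple:.0f} times the current volume")
```

On these figures, pre-construction permitting would grow roughly 146-fold and operating permitting roughly 415-fold.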

Train praises Administrator Lisa Jackson for taking a “measured approach” and demonstrating her “sensitivity to economic concerns” by exempting, for six years, all but the largest CO2 emitters from permitting requirements. But the Clean Air Act nowhere gives the Administrator the authority to suspend or revise the permitting programs’ applicability thresholds. EPA’s so-called Tailoring Rule is actually an amending rule.

To pound the square peg of climate policy into the round hole of the Clean Air Act, EPA has to play lawmaker and effectively change the statute. This breach of the separation of powers only compounds the constitutional crisis inherent in EPA’s hijacking of fuel economy regulation and climate policymaking.

The importance of the vote on S.J.Res.26 is difficult to exaggerate. Nothing less than the integrity of our constitutional system of separated powers and democratic accountability hangs in the balance.